Anti-toxic comments

Toxic comments are very harmful to a healthy community, but we don't want moderators to spend a lot of effort keeping them in check. That is why we built a machine learning system that detects toxic comments. By default, any comment the system classifies as toxic is blocked.

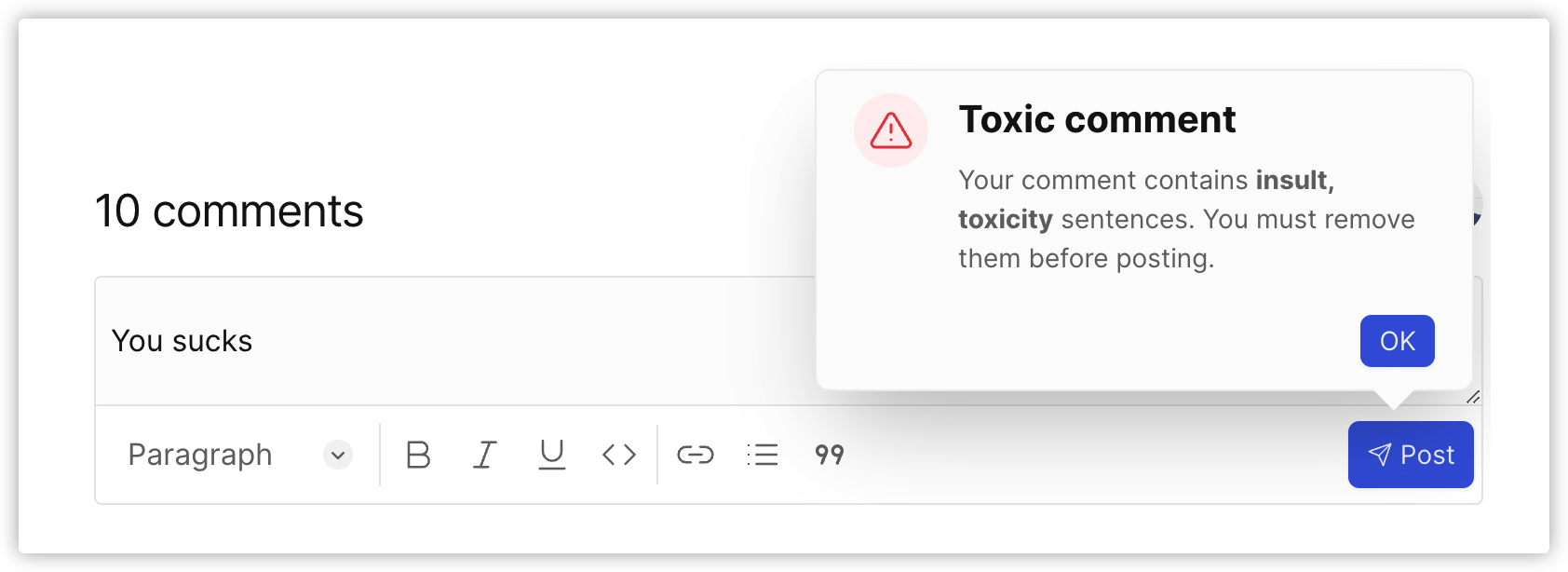

Example

When a user tries to post a toxic comment, a blocking popup appears.

Supported toxic labels

Our machine learning system currently supports the following toxicity labels:

- toxicity
- severe toxicity
- identity attack
- insult
- threat
- sexual explicit
- obscene
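A minimal sketch of how the default blocking rule could work with these labels. It is an illustration under assumptions, not our production code: it assumes the model returns a score between 0 and 1 for each label, that a comment is blocked when any label's score reaches a single shared threshold, and that this threshold is 0.5. The snake_case label spellings and the names `moderate`, `Decision`, `SUPPORTED_LABELS`, and `DEFAULT_THRESHOLD` are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass

# The supported labels, spelled as snake_case keys (spelling is an assumption).
SUPPORTED_LABELS = (
    "toxicity",
    "severe_toxicity",
    "identity_attack",
    "insult",
    "threat",
    "sexual_explicit",
    "obscene",
)

# Hypothetical cut-off; the real system's threshold is not documented here.
DEFAULT_THRESHOLD = 0.5


@dataclass
class Decision:
    blocked: bool                # True if the comment should be blocked
    triggered_labels: list[str]  # labels whose score reached the threshold


def moderate(scores: dict[str, float], threshold: float = DEFAULT_THRESHOLD) -> Decision:
    """Block a comment if any supported label's score reaches the threshold.

    `scores` maps label names to probabilities in [0, 1], as a multi-label
    classifier would produce. Unknown labels are ignored; missing labels count as 0.
    """
    triggered = [
        label for label in SUPPORTED_LABELS
        if scores.get(label, 0.0) >= threshold
    ]
    return Decision(blocked=bool(triggered), triggered_labels=triggered)
```

For example, `moderate({"toxicity": 0.92, "insult": 0.71, "threat": 0.03})` returns a `Decision` with `blocked=True` and `triggered_labels=['toxicity', 'insult']`. A single shared threshold keeps the sketch short; a real deployment might tune a separate threshold per label.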